In May 2025, the editorial team of the Israeli Russian-language outlet Newsru.co.il noticed numerous strange posts appearing simultaneously on several Facebook pages. Each publication contained a short biography of a Holocaust victim and a portrait. It turned out that the images were generated using artificial intelligence, and the captions were mostly fabricated. Using this example, “Provereno” explains how neural networks (and flaws in Facebook’s monetization programs) help scammers profit from history enthusiasts and why the very structure of social networks not only fails to prevent such schemes but encourages them.

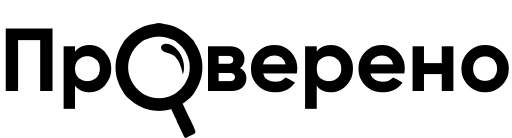

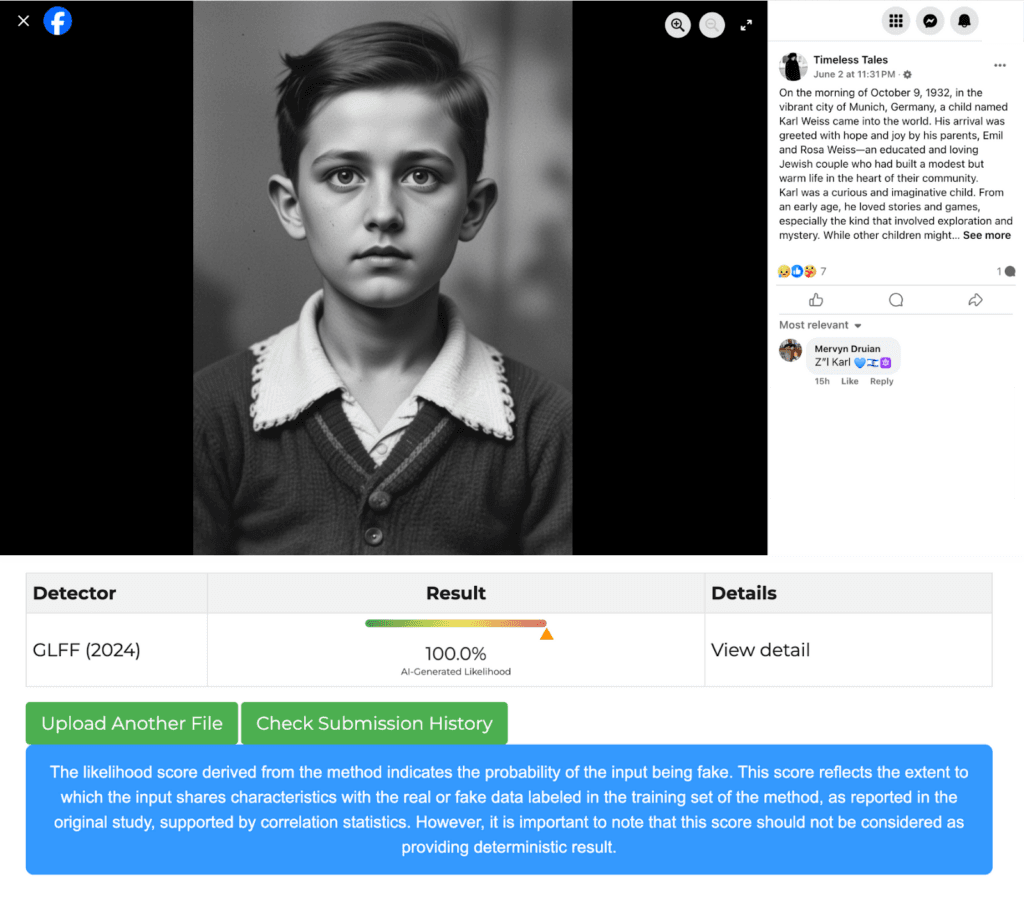

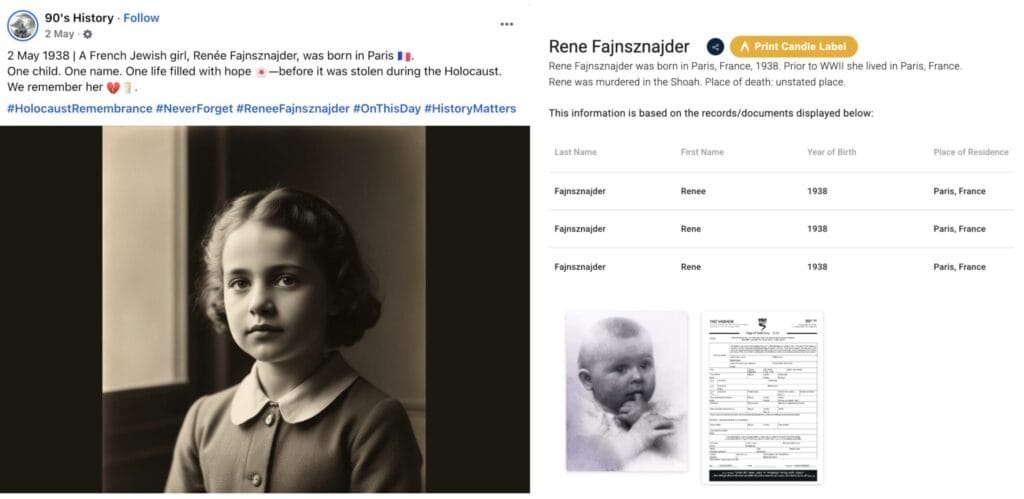

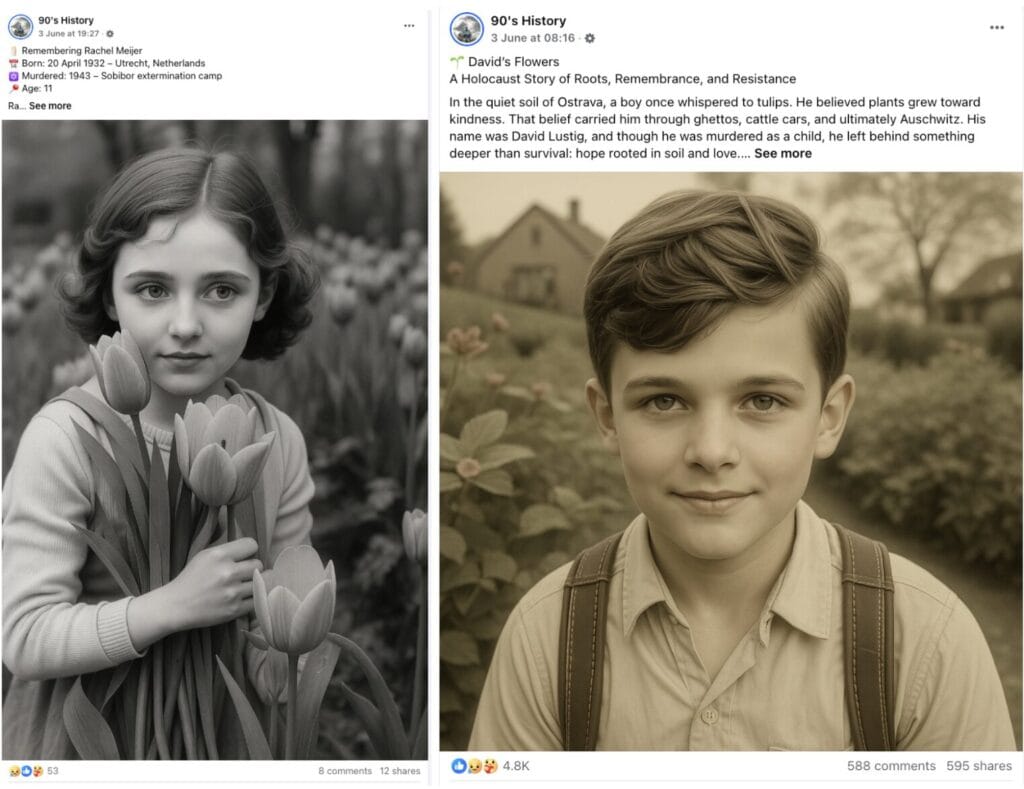

The Newsru.co.il editorial team was drawn to photographs of impeccably beautiful, elegantly dressed children: pictures so perfect that there was no doubt they were generated by neural networks. Journalists then scrutinized the text of these posts and discovered that, in most instances, it too was fabricated. Although the names used often belonged to actual Holocaust victims from the Yad Vashem museum database, the birth dates, places, and circumstances of death were entirely fictional. These posts also included sentimental details (“he played chess with his grandfather,” “she adored her violin,” “sang lullabies to her brother,” and so on) that are practically impossible to verify.

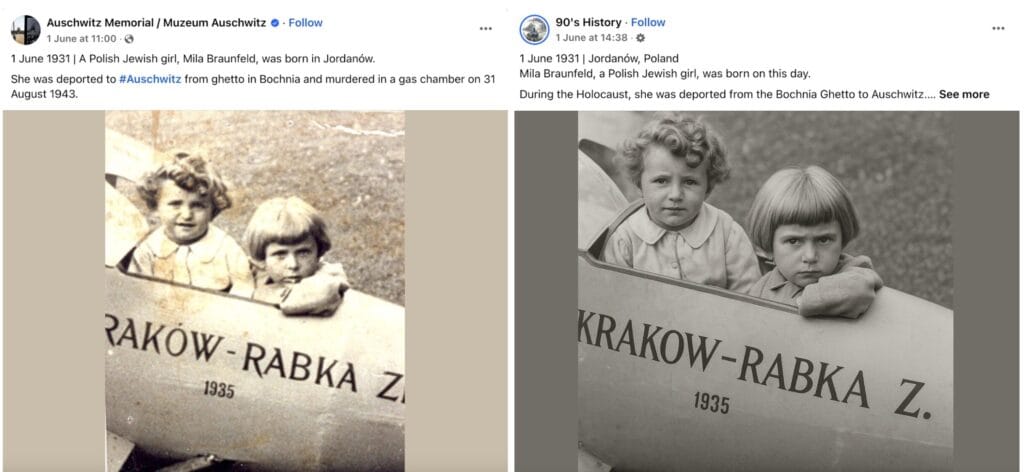

The Israeli journalists did not name the specific pages responsible for such content, but shared this information with “Provereno.” We randomly selected several posts, used AI detectors to analyze the photographs, and confirmed they were AI‑generated. Other findings by our colleagues about the methods behind creating these publications were also confirmed. Notably, the owners of some pages could republish the same image with different captions, or use different portraits to illustrate the same story.

Moreover, we found several posts using real photos of Holocaust victims, previously published by the Auschwitz-Birkenau Museum on its Facebook page. Yet, in those cases, the photos had also been manipulated with AI.

On May 22 — two days after Newsru.co.il’s exposé — a statement appeared on the Auschwitz-Birkenau Museum’s page. The museum expressed concern over the circulation of fictitious biographies and AI‑generated images of Holocaust victims. Museum spokespeople denounced these actions as “falsification of history,” emphasizing that such distortions harm the memory of the real victims. The museum’s deputy press secretary, Pawel Sawicki, pointed out that the spread of such fake materials could aid Holocaust deniers, warning: “If fake victims appear, someone may claim that the rest was fabricated as well.”

The museum’s statement mentioned only one Facebook page — 90’s History — as responsible for such materials. The Newsru.co.il editorial team, our colleagues, examined posts from three different profiles. “Provereno” found nearly a dozen similar public pages. Two of them (“Код 4 5 0” and Ok 30) exclusively post AI-generated content about Holocaust victims; the others (such as the relatively popular 90’s History and Let’s Laugh) mix these with other information, partially or completely fabricated using neural networks, on historical topics.

A network or a coincidence?

The pages discovered by “Provereno” share a remarkably similar content strategy, suggesting a possible link or centralized management. Such schemes aren’t new: one famed example is the so-called Macedonian fake news farms. In 2016, a group of young Macedonians earned thousands of dollars per month by producing sensational news stories to boost Donald Trump’s first presidential campaign. They launched dozens of websites with sensational headlines, drawing in American social media users. Though motivated purely by profit and indifferent to U.S. politics, their operation became a global disinformation hub. A similar (Macedonian as well) fake-news farm was uncovered in 2019, also spreading conspiracy theories.

However, our analysis does not confirm the hypothesis of a single coordinated network. After studying information about the pages, the timing of the first generated photos, the digital footprints of the presumed owners of these pages on Facebook, and other data, we found little common ground. All the pages have been renamed multiple times and were likely managed by different administrators before; some may even have been hacked. For instance, the 90’s History page was, until late March 2024, the official profile of the Tennessee State Fire Marshal’s Office (relevant content is still available), while another, Sophia Brown, was a fitness-related page, judging by its original name.

Two pages (90’s History and Historical Snapshots) are allegedly managed by the U.S. legal entities Star Groups LLC and Being Master LLC, respectively. The profile owners even passed Meta verification, yet neither company appears in U.S. business registries (raising serious questions about Facebook’s verification process). Historical Snapshots, by the way, although it claims to be U.S.-owned, is run from Myanmar. Other pages, according to their “Transparency” tabs, are managed by administrators from various countries, from Australia to Iran. Managers of several pages (such as Timeless Tales, noted by our Israeli colleagues) are from India. Some administrators’ locations are not specified or could not be determined by the social network, but some have left digital footprints: for example, the Past and Proven page is owned by a resident of Nigeria.

These pages began posting AI-generated content about Holocaust victims at different times, but the recent surge started in late April and early May 2025 (likely because Israel’s national Holocaust Remembrance Day fell on April 23 this year). With rare exceptions, the photos, the names taken from the Yad Vashem museum database, and the generated texts were all different. While some stories overlapped, it appears page administrators merely stole successful posts from one another.

So these pages are not part of a single network but rather independent ventures that apparently pursue a single goal: making money.

Nothing Personal, Just Business

Each of the pages analyzed by “Provereno” published between 10 and 50 posts per day. Running such a large-scale project, even if the generation of texts and images and their publication are automated, is a full-time job, hardly something done for the sake of an idea. The motivation appears to be purely economic: these administrators exploit loopholes in Facebook’s content-monetization system. While “Provereno” couldn’t formally verify whether these pages participate in Facebook’s monetization program (such data isn’t publicly available), numerous indicators strongly suggest they do.

Meta generates nearly all its revenue through ads on Facebook, Instagram, Threads, Messenger, and WhatsApp. In 2024, the company reported $164.5 billion in revenue, over $160 billion of which came from advertising. To encourage content creation and thereby increase ad inventory, Meta launched monetization programs. The first, introduced in August 2017, covered horizontal videos; in 2022 it was expanded to include vertical shorts, and in 2023 Meta introduced a Performance Bonus for text and photo creators. In October 2024, Meta consolidated all of these programs into the Facebook Content Monetization beta. Last year alone, Meta paid out $2 billion to creators.

Payouts are tied to engagement—views, comments, reactions, shares. Experts estimate creators earn around $0.0065 per engagement. With a very large reach, the reward may be calculated at a better rate, but most content creators earn about $10–20 per day. This is very little for residents of developed countries, but the monetization program is also available in the Philippines, India, Colombia, Thailand, Indonesia, and Mexico, where such earnings can be quite attractive for poorer populations. Especially if one of the many posts “goes viral”—for example, this 90’s History publication about Holocaust victims garnered 9,600 likes, almost 1,270 comments, and more than 970 shares and could have brought the page administrator about $77. Even for residents of the U.S. or Western Europe, such income, especially with hundreds of posts per month, can be a decent supplement to a salary.
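The arithmetic behind these figures can be reproduced with a short sketch. It assumes a flat rate of $0.0065 per engagement (the expert estimate cited above, not an official Meta figure) and counts only likes, comments, and shares, since those are the numbers quoted for the viral post. The function names are illustrative and do not correspond to any Facebook API.

```python
import math

# Assumption: a flat per-engagement rate, taken from the expert estimate
# quoted in the article. Real payouts are not public and may vary with reach.
RATE_PER_ENGAGEMENT_USD = 0.0065


def estimate_payout(likes: int, comments: int, shares: int,
                    rate: float = RATE_PER_ENGAGEMENT_USD) -> float:
    """Estimate the payout in USD for one post from its engagement counts."""
    engagements = likes + comments + shares
    return engagements * rate


def engagements_needed(target_usd: float,
                       rate: float = RATE_PER_ENGAGEMENT_USD) -> int:
    """Smallest whole number of engagements that reaches the target payout."""
    return math.ceil(target_usd / rate)


if __name__ == "__main__":
    # The viral 90's History post: 9,600 likes, 1,270 comments, 970 shares.
    viral = estimate_payout(likes=9_600, comments=1_270, shares=970)
    print(f"Viral post estimate: ${viral:.2f}")

    # Daily engagement needed for the typical $10-20 per day.
    for target in (10, 20):
        print(f"${target}/day needs about {engagements_needed(target)} engagements")
```

Under these assumptions the viral post comes to 11,840 engagements, or roughly $77, and the typical $10–20 per day corresponds to about 1,500 to 3,100 engagements daily across all of a page’s posts.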

All the pages studied by “Provereno” were registered or repurposed after the Performance Bonus launch. To participate in the monetization program, you must receive an invitation from Facebook (either directly or after applying). While the eligibility criteria remain opaque, subscriber counts appear to influence selection: experts believe that 10,000 followers were required for the Performance Bonus, though the threshold may have been lowered for the Content Monetization beta. This may explain why some pages with AI‑generated Holocaust stories weren’t newly created but hacked from existing accounts, as they offered pre‑existing follower bases.

The innovations in Facebook’s monetization program coincided with the rapid development of neural networks, which became accessible to a wide range of users. People worldwide gained the ability not only to generate plausible texts and images but also to automate their publication on social networks and websites without specialized skills. For example, one Reddit user wrote in a discussion about Performance Bonus: “I made an automation that generates unique images with inspirational/motivational/emotional quotes using AI. All the images are original and can be with your custom watermark. All the quotes are from a collection of posts that had huge engagement through FB.”

The cost of creating such content is minimal or even near zero — a significant number of models capable of generating more or less “human-like” posts are open-source, can be installed on a computer or in Google Cloud, and then fine-tuned for any task, from generating and publishing Facebook posts to sending phishing emails. If a user is too lazy to figure out AI services, they can use the work of “colleagues”: there are sites where you can find images generated by neural networks or prompts on various topics, including the Holocaust. Finally, at their disposal is Yad Vashem’s open database, where they can find victims’ names, as well as the Auschwitz-Birkenau Museum’s page, where several posts about Holocaust victims are published daily. These posts are easy to analyze, selecting as samples those that received the most engagement. All you have to do is rewrite them using AI, add an illustration, publish, and wait for likes.

There’s no need to spend money on advertising: Facebook’s algorithm itself will show the posts to a large audience. Since 2019, the social network has consistently and systematically reduced the reach and promotion functions available to so-called “publishers” (i.e., media editors and website owners who published links to their materials on Facebook) in favor of user content posted exclusively on the platform. The current algorithm works so that if a user likes, for example, a post from the Yad Vashem museum, other posts about Holocaust victims are likely to appear in their feed soon afterward. And most likely, these won’t be Yad Vashem publications, even if the user is subscribed to the museum’s page, but content from another page or group. In 2024, Meta CEO Mark Zuckerberg said that up to 30% of the content that appears in Facebook users’ feeds consists of posts from pages they are not subscribed to but which the algorithm recommends. The quality and reliability of this content are of little interest to company management unless complaints or scandals arise.

Finally, betting on posts about Holocaust victims is a win-win. People react more emotionally to a combination of a visual image and a poignant text, especially when it comes to tragedies and children, and the topic itself resonates among users in countries where death camps operated, World War II battles took place, or large Jewish communities lived or still live. A user scrolling mindlessly slows down at the right place and leaves a like or comment, and then the algorithm helpfully starts feeding similar posts into the feed.

What comes next?

Even when AI content in social networks was limited to inspirational quotes or quirky images like “Shrimp Jesus,” its spread on Facebook already caused serious concern among media and researchers. However, over time, page managers, in pursuit of an audience, became indiscriminate and generated anything that could bring them subscribers, likes, and shares — and thus money through monetization programs. For example, in February 2025, Business Insider tech columnist Katie Notopoulos noted that Facebook was filled with recipes composed by neural networks. And in the fall of 2024, CNN drew attention to AI-generated posts on historical topics.

History interests many people: in the English-speaking segment of Facebook, there are many popular thematic groups with hundreds of thousands of subscribers. Such users became the target audience for the pages analyzed in our review (many of them repost their Holocaust victim publications in relevant groups). Readers actively respond to touching stories about child victims of the Holocaust and thereby bring money to the creators of these posts.

However, while some users supposedly satisfy their interest in history and others profit from it, still others may use such content for their own (and not always good) purposes. Back in 2024, UNESCO published a report warning that artificial intelligence could pose a threat to Holocaust remembrance. The report stated that abuse of AI could lead to the spread of false and misleading claims on this topic, both due to flaws in the models themselves and because Holocaust deniers would deliberately use them to create content supporting their views. This, in turn, could lead to increased antisemitism and misunderstanding of one of the most important events of the 20th century.

Artificial intelligence is abused not only by users exploiting monetization programs but also by those advertising their goods and services on social networks. Recently, fact-checkers from the Italian project Open noticed viral photos on Facebook and X of a girl who was allegedly fighting in the Ukrainian Armed Forces and therefore forced to celebrate her birthday at the front in tears. It turned out that the photos were originally posted by profiles advertising online stores selling T-shirts.

The problem is that social networks are not interested in limiting users who spread AI-generated false information. The more content on platforms, the more time people spend there, and the more opportunities to show ads and get revenue. By some estimates, nearly every fourth post published on Facebook from November 2023 to November 2024 was written not by a human but by artificial intelligence. At the same time, Meta’s revenue (which, as mentioned, is almost entirely from advertising) grew by 22% last year compared to the previous year.

Under public pressure and political circumstances, social network owners could introduce mechanisms to curb the spread of false information, including AI-generated texts, photos, and videos. For example, Meta once partnered with independent fact-checking organizations to launch a fact-checking program, but in 2025 (again for political reasons) it was terminated, so far only in the U.S., though it may soon cease to operate in other countries as well. The program, which employed hundreds of professionals from different countries, is to be replaced by special comments from ordinary users, following the Community Notes principle in X. In the U.S., this system is already in place, but none of the viral posts about child Holocaust victims that “Provereno” randomly selected had such notes attached. Even experts who are positive about this approach write about its vulnerability to trolls, coordinated information attacks, and ordinary human biases. Disinformation researchers and fact-checking specialists warn that the consequences of these changes will be much more significant and threatening.

Meta’s press office did not respond to “Provereno’s” requests for comment at the time of publication.

Read more:

- Lead Stories Goes To Brussels: Statement Made During Public Hearing Of European Parliament 'Democracy Shield' Committee

- Ilya Ber. Fakebook. What’s Wrong with Zuckerberg’s Decision to End the Fact-Checking Program

- Pavel Bannikov. Mark, Musk, and the Freedom of Lies
